Summary

The Constraint Most Teams Miss

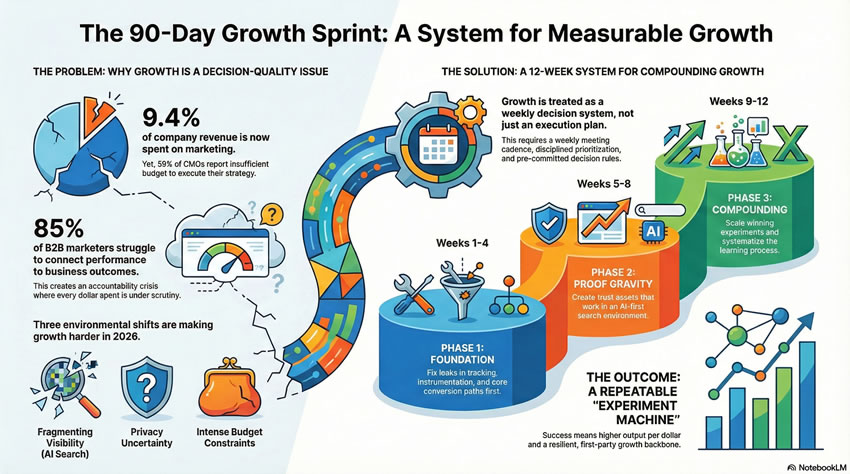

Today, growth is not a creativity problem. It is a decision-quality problem. Three structural forces have converged to expose a gap most revenue teams have not yet named: the gap between motion and learning. Teams run campaigns. They generate reports. They hold meetings. But they rarely make defensible decisions based on evidence.

The pattern is consistent across enterprise and mid-market organizations. Activity compounds without direction. Budgets increase without corresponding gains in velocity or efficiency.

Consider the numbers. Marketing budgets reached 9.4% of company revenues in 2025, up from 7.7% the year prior. Yet 59% of CMOs report insufficient budget to execute their strategy (CMO Survey, 2025). This is not a funding constraint. It is a coherence failure.

Meanwhile, 85% of B2B marketers struggle to connect marketing performance to business outcomes, creating an accountability crisis that puts every dollar under scrutiny.

The only durable advantage remaining is learning velocity: how fast your team forms smart hypotheses, runs clean experiments, and makes defensible decisions week after week.

That is what this 90-day sprint delivers.

The Governing Concept: Growth as a Weekly Decision System

Most growth plans fail because they treat execution as the hard part. The actual constraint is decision integrity.

A real sprint has four non-negotiables:

- A weekly growth meeting cadence with a standard agenda (metrics review, lessons learned, experiment selection, pipeline health).

- A disciplined prioritization method so you do not select experiments based on the loudest opinion in the room.

- A pre-committed decision rule for every test (what counts as a win, a loss, or a result that needs more data).

- A bias toward control when results are inconclusive.

This structure creates compounding learning without organizational thrash. It is how elite teams in 2026 outpace competitors despite identical budgets.

The Q1 2026 Environment: Three Structural Shifts

A modern sprint must target growth constraints created by today’s specific environment.

Shift 1: Visibility Is Fragmenting Across Answer Engines

AI Overviews suppress clicks and shift value from ranking to being referenced. Multiple studies across 2024 and 2025 cite meaningful CTR declines when AI summaries appear.

This means experiments must measure not just traffic, but qualified actions and downstream pipeline. Optimize for quotability: create answer blocks, comparison tables, and provable claims that AI systems can cite. Track impressions separately from qualified actions per visit to understand true engagement.

Shift 2: The Privacy Trajectory Is No Longer Linear

Google adjusted its approach to third-party cookies and Privacy Sandbox plans, making the ecosystem less predictable than the old date-certain deprecation narrative.

Your sprint should reduce dependency on brittle tracking by strengthening first-party conversion paths, modeled attribution, and CRM hygiene. Companies that allocate 11.2% of digital budgets to first-party data initiatives today expect that share to reach 15.8% by 2026.

Shift 3: Budget Constraints Force Ruthless Prioritization

Despite budgets reaching 9.4% of revenue, flat growth means the win condition has shifted from more spend to higher conversion efficiency and faster cycle-time learning.

For every $92 spent on acquisition, only $1 goes to conversion optimization. Yet conversion rate optimization delivers measurable ROI without additional traffic. Average landing page conversion rates sit at 2.35%. Because funnel stages multiply, small improvements at each stage compound into large gains.
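To make the compounding claim concrete, here is a small Python sketch of a two-stage funnel. Only the 2.35% landing-page rate comes from the text; the traffic volume, the 20% lead-to-SQL rate, and the 10% relative lift per stage are illustrative assumptions, not benchmarks.

```python
# Illustrative funnel: landing page -> lead -> SQL.
# Only the 2.35% landing-page rate comes from the text; the rest are assumptions.
VISITORS = 100_000        # hypothetical monthly traffic
LP_TO_LEAD = 0.0235       # average landing page conversion rate
LEAD_TO_SQL = 0.20        # hypothetical lead-to-SQL rate


def expected_sqls(visitors: int, stage_rates: list[float]) -> float:
    """Expected SQLs: stage conversion rates multiply, so lifts compound."""
    result = float(visitors)
    for rate in stage_rates:
        result *= rate
    return result


baseline = expected_sqls(VISITORS, [LP_TO_LEAD, LEAD_TO_SQL])
# A 10% relative lift at each of the two stages, with no extra traffic.
improved = expected_sqls(VISITORS, [LP_TO_LEAD * 1.10, LEAD_TO_SQL * 1.10])

print(f"baseline SQLs: {baseline:.0f}")               # -> 470
print(f"improved SQLs: {improved:.1f}")               # -> 568.7
print(f"total lift: {improved / baseline - 1:.0%}")   # -> 21%
```

Two 10% lifts multiply to 1.1 × 1.1 = 1.21, roughly 21% more SQLs from the same traffic, which is the sense in which conversion work pays off without additional acquisition spend.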

Sprint Architecture: 90 Days, 12 Experiments, One Compounding Loop

Roles: Minimum Viable Team

- Sprint Owner (Growth Lead): Runs cadence, enforces decision rules, protects focus, owns the narrative to executives.

- Analyst / Growth Ops: Instrumentation, reporting, guardrails, data quality enforcement.

- Experiment Owners: One accountable owner per test. Could be marketing, product, web, lifecycle, or SDR ops. Ownership covers the hypothesis, the execution, and communicating the results.

This is not about headcount. It is about clear accountability. In small teams, one person may wear multiple hats. The structure prevents “everyone owns it so no one owns it” syndrome.

Metrics Hierarchy: What You Track, In Order

North Star Metric (choose one):

- Pipeline created (MQL to SQL progression).

- Activated users (product-led growth).

- Revenue (direct response).

- Qualified demo starts (enterprise).

Sprint Scoreboard (tracked weekly):

- Conversion rate (landing page to lead, lead to SQL).

- CAC efficiency proxy (cost per qualified action, or CPQA).

- Sales-accepted rate (marketing-to-sales handoff quality).

- Speed to first value (time from signup to activation).

Experiment Metrics (per test):

- One primary metric (the win condition).

- One to two guardrails (ensure you did not break something else).

Example: The primary metric is demo completion rate. Guardrails: (1) lead quality score does not drop more than 5%, (2) time-to-complete does not increase more than 30%.
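The guardrails in this example can be written down as code before the test runs, which makes the pre-commitment explicit. A minimal Python sketch, assuming both thresholds are relative changes measured against the control; the `Guardrail` class and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Guardrail:
    name: str
    control: float
    variant: float
    max_worsening: float          # allowed relative change in the bad direction
    higher_is_better: bool = True

    def passes(self) -> bool:
        change = (self.variant - self.control) / self.control
        # Flip the sign so that a positive value always means "got worse".
        worsening = -change if self.higher_is_better else change
        return worsening <= self.max_worsening


# Thresholds from the example above; the control and variant values are made up.
guardrails = [
    Guardrail("lead quality score", control=72.0, variant=70.0, max_worsening=0.05),
    Guardrail("time to complete (s)", control=90.0, variant=120.0,
              max_worsening=0.30, higher_is_better=False),
]

for g in guardrails:
    print(f"{g.name}: {'PASS' if g.passes() else 'FAIL'}")
```

Here lead quality dips about 2.8% (within the 5% limit) while time-to-complete rises about 33% (over the 30% limit), so the second guardrail fails even if the primary metric improved.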

The 12-Week Experiment Plan

Each experiment includes: Owner, Primary Metric, Decision Rule. Customize the thresholds to your baselines. The structure is the critical element.

Decision-rule discipline: Pre-commit thresholds to prevent narrative laundering after results land. Write the success criteria before running the test.

Weeks 1 to 4: Fix the Leaks Before You Add Fuel

Growth teams commonly review tempo, delayed tests, and key lessons weekly, and those reviews are only as good as the data and handoffs underneath them. This phase front-loads the foundational work that keeps the sprint from collapsing under measurement gaps and broken handoffs.

Week 1: Friction Audit and Instrumentation Patch

Owner: Growth Ops

What you are testing: Can you reliably track the top 3 landing paths, top 3 conversion events, and CRM attribution?

Primary metric: Percentage of key events captured with full attribution (target: 95% or higher).

Decision rule: Ship instrumentation fixes until event integrity reaches 95% or higher. If it remains below 95%, delay all other experiments until this is resolved.

Tactical execution:

- Audit top 3 traffic sources (organic, paid, email) for tracking pixel firing.

- Verify CRM field mapping for utm_source, utm_campaign, utm_content.

- Test that each form submission (lead, demo, contact) creates the correct CRM record.

- Document data gaps in a shared doc (becomes your Week 2 sprint backlog).

Teams skip this step, thinking “we already have Google Analytics.” Then Week 6 rolls around and they cannot prove which experiment drove pipeline. Do not skip the audit.
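The Week 1 primary metric is a simple ratio once you can export key conversion events. A minimal Python sketch, assuming each event is a dict; the three UTM fields come from the audit checklist, while `crm_record_id` is a hypothetical field standing in for "a CRM record was created."

```python
REQUIRED_FIELDS = ("utm_source", "utm_campaign", "utm_content", "crm_record_id")


def event_integrity(events: list[dict]) -> float:
    """Share of key conversion events that carry every required attribution field."""
    if not events:
        return 0.0
    complete = sum(1 for e in events if all(e.get(f) for f in REQUIRED_FIELDS))
    return complete / len(events)


# Hypothetical export of four key events; two are missing attribution data.
sample = [
    {"utm_source": "google", "utm_campaign": "q1", "utm_content": "hero", "crm_record_id": "L-1"},
    {"utm_source": "email", "utm_campaign": "nurture", "utm_content": "cta", "crm_record_id": "L-2"},
    {"utm_source": "linkedin", "utm_campaign": "q1", "utm_content": "", "crm_record_id": "L-3"},
    {"utm_source": "google", "utm_campaign": "brand", "utm_content": "faq", "crm_record_id": None},
]

score = event_integrity(sample)
print(f"event integrity: {score:.0%}")  # -> 50%
print("proceed" if score >= 0.95 else "fix instrumentation before other tests")
```

The events that fail the check are exactly the data gaps that belong in the shared doc for the Week 2 backlog.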

Week 2: Offer Clarity Test

Owner: Web / Content

What you are testing: Does a rewritten hero section plus CTA on your highest-traffic page improve conversion?

Primary metric: Lead or demo conversion rate.

Guardrails: Lead quality score (measured by sales-accepted rate).

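Decision rule: Keep the variant if CVR increases 10% or more with no drop in lead quality. Roll back if quality drops more than 5%; any smaller quality dip makes the result inconclusive, so the control wins (see Rule 2 below).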

Tactical execution:

- Identify your highest-traffic landing page (likely homepage or category page).

- Rewrite hero to follow: emotion, proof, offer, action.

- Test CTA copy shifts from “you” to “me” language (one widely cited test reported a 90% lift).

- Add whitespace around the CTA button (one case study reported a 232% increase in conversions).

- Run the A/B test for at least 2 weeks and until it reaches statistical significance.

Personalized CTAs convert 42% more visitors than generic ones. If you have segmentation data, test personalized variants by traffic source or industry.
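The text does not say which significance test to use; a common choice for comparing two conversion rates is a two-sided two-proportion z-test, swapped in here as an assumption (your testing tool may use a different method). This Python sketch applies the Week 2 rule, treating the 10% threshold as relative lift, the sales-accepted rate as the lead-quality guardrail, and alpha = 0.05 as an assumed significance level.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for control A versus variant B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def offer_test_decision(control: tuple[int, int], variant: tuple[int, int],
                        sal_control: float, sal_variant: float,
                        alpha: float = 0.05) -> str:
    """Week 2 rule: +10% CVR with no lead-quality drop; roll back on a >5% drop."""
    lift = (variant[0] / variant[1]) / (control[0] / control[1]) - 1
    quality_change = sal_variant / sal_control - 1
    _, p_value = two_proportion_z_test(*control, *variant)
    if quality_change < -0.05:
        return "roll back"
    if p_value < alpha and lift >= 0.10 and quality_change >= 0:
        return "keep variant"
    return "keep control"  # inconclusive or below threshold


# Hypothetical results: (conversions, visitors) per arm, plus SAL rates.
print(offer_test_decision((240, 10_000), (300, 10_000), sal_control=0.40, sal_variant=0.41))
```

With 10,000 visitors per arm, a move from 2.4% to 3.0% is significant; the same rates on 1,000 visitors per arm are not, and the control stays.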

Week 3: Form Strategy Test

Owner: Web Ops

What you are testing: Does a shorter form (email-first or progressive profiling) increase completions without degrading lead quality?

Primary metric: Form completion rate.

Guardrails: Sales-accepted lead rate.

Decision rule: Keep if completion rate increases 15% and SAL rate does not drop more than 5%.

Tactical execution:

- Map current form fields versus CRM utilization (often 40% or more of fields are never used).

- Test three variants: (a) email only, (b) email plus company, (c) current long form.

- Use progressive profiling to ask for more info on subsequent visits.

- Implement form analytics to see drop-off by field.

Expedia increased leads 49% by removing a single field. FSAstore.com saw a 53.8% increase in average sales revenue per visitor by reducing form fields. The evidence points one way: shorter forms usually convert better, and the SAL guardrail tells you whether the extra completions are qualified.
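Drop-off by field, from the last tactic in the list, is simple arithmetic once your form analytics tool exports how many sessions reached each field. A Python sketch with hypothetical field names and counts; the final "submitted" entry stands for completed forms.

```python
def field_dropoff(reached: dict[str, int]) -> list[tuple[str, float]]:
    """For fields listed in form order, return the share of sessions that
    reached each field but never reached the next step."""
    steps = list(reached)
    return [
        (name, 1 - reached[nxt] / reached[name])
        for name, nxt in zip(steps, steps[1:])
    ]


# Hypothetical form-analytics export: sessions that reached each field, in order.
reached = {"email": 1_000, "company": 820, "phone": 500, "submitted": 460}

for name, lost in field_dropoff(reached):
    print(f"{name:<8} lost {lost:.0%}")

worst_field, _ = max(field_dropoff(reached), key=lambda item: item[1])
print("first candidate to cut or defer to progressive profiling:", worst_field)
```

In this made-up data the company field loses about 39% of sessions, so it would be the first field to remove or move to a later visit.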

Week 4: Sales Handoff SLA Test

Owner: RevOps

What you are testing: Does faster speed-to-lead contact improve SQL conversion rate?

Primary metrics: (1) Speed-to-lead median time, (2) MQL to SQL conversion rate.

Decision rule: Keep the new SLA if speed improves AND the SQL rate rises 5% or more.

Tactical execution:

- Audit current speed-to-lead (median and 75th percentile).

- Implement automated routing rules (round-robin, territory-based, or AI-based).

- Set SLA: respond to all demo requests within 15 minutes during business hours.

- Create fallback protocols (if the rep does not respond within 15 minutes, escalate to a manager).

- Track SLA compliance weekly in your growth meeting.

Faster response times correlate with higher close rates, and every hour of delay lowers the probability of conversion. Because the fix is mostly routing rules and process, this is low-hanging fruit with outsized ROI.
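The speed-to-lead audit and weekly SLA tracking reduce to a few summary numbers. A Python sketch using the standard library's `statistics` module, assuming you can export minutes from demo request to first rep contact; it ignores the business-hours condition, and the sample times are hypothetical.

```python
import statistics


def speed_to_lead_report(minutes: list[float], sla_minutes: float = 15.0) -> dict[str, float]:
    """Summarize minutes from demo request to first rep contact (needs 2+ values)."""
    # quantiles(n=4) returns the three cut points [Q1, median, Q3]; Q3 is the 75th percentile.
    _, _, q3 = statistics.quantiles(minutes, n=4)
    return {
        "median": statistics.median(minutes),
        "p75": q3,
        "sla_compliance": sum(m <= sla_minutes for m in minutes) / len(minutes),
    }


# Hypothetical response times in minutes for ten demo requests.
times = [4, 7, 9, 12, 14, 18, 25, 40, 95, 180]
print(speed_to_lead_report(times))
```

Reporting the 75th percentile alongside the median shows the slow tail that a median alone hides; `statistics.quantiles` uses its default "exclusive" method here, so a BI tool with different interpolation may report a slightly different p75.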

Weeks 5 to 8: Create Proof Gravity That Closes Faster in an AI-First World

AI summaries reduce clicks and change what visibility means. Your proof must be portable, quotable, and confidence-building even when traffic is volatile. This phase builds trust assets that work across answer engines, buyer research, and sales conversations.

Week 5: One-Page Proof Asset

Owner: Product Marketing

What you are creating: Industry-specific ROI story with top 3 objections addressed.

Primary metric: Assisted conversion rate (conversion rate among deals where this asset was viewed).

Decision rule: Keep if assisted CVR increases 8% versus deals without asset engagement.

Tactical execution:

- Interview 5 to 10 customers to extract quantifiable results (time saved, revenue gained, cost reduced).

- Create one-page template: Problem, Solution, Results, ROI calculation.

- Add “Addressing Common Concerns” section with top 3 objections.

- Make it downloadable (PDF) and trackable (gated or via unique links).

- Train sales to use in discovery and proposal stages.

User-generated content in SaaS increases conversions by 154%. If possible, include customer video testimonials or direct quotes with attribution.
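The Week 5 comparison of deals with and without asset engagement can be computed from a CRM export. A Python sketch with hypothetical field names (`viewed_asset`, `converted`) and made-up deals. Because buyers choose whether to view the asset, this comparison is correlational; a randomized holdout would be needed to claim the asset caused the difference.

```python
def assisted_lift(deals: list[dict]) -> float:
    """Relative difference in conversion rate, asset viewers versus non-viewers."""
    def rate(group: list[dict]) -> float:
        return sum(d["converted"] for d in group) / len(group)

    viewed = [d for d in deals if d["viewed_asset"]]
    not_viewed = [d for d in deals if not d["viewed_asset"]]
    return rate(viewed) / rate(not_viewed) - 1


# Hypothetical CRM export: 40 deals viewed the asset (12 converted),
# 60 did not (15 converted).
deals = (
    [{"viewed_asset": True, "converted": i < 12} for i in range(40)]
    + [{"viewed_asset": False, "converted": i < 15} for i in range(60)]
)

lift = assisted_lift(deals)
print(f"assisted lift: {lift:+.0%}", "keep" if lift >= 0.08 else "keep control")
```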

Week 6: Comparison Page Test

Owner: Content / SEO

What you are creating: Fair, comprehensive comparison of your category alternatives.

Primary metric: Qualified actions per visit (demo requests, trial signups).

Decision rule: Keep if qualified actions per visit increases 10% compared to baseline landing pages.

Tactical execution:

- Research: What comparison searches are prospects making? (Use keyword tools, sales calls, support tickets).

- Create honest, detailed comparison: Your Product versus Competitor A versus Build-It-Yourself.

- Include comparison table with features, pricing transparency, and use cases.

- Add “When to choose X” sections for each option (builds trust through honesty).

- Optimize for featured snippets and AI answer extraction.

Buyers are doing comparison research anyway. Creating the authoritative comparison puts you in control of the narrative and builds trust. These pages tend to rank well for high-intent searches.

Week 7: Answer Block Optimization

Owner: SEO / Content

What you are creating: An FAQ with concise direct answers and source citations, optimized for AI extraction.

Primary metrics: (1) SERP visibility (impressions), (2) Qualified actions per visit.

Decision rule: Keep if impressions increase AND qualified actions per visit holds or improves.

Tactical execution:

- Identify your top 10 “how to” or “what is” queries from Search Console.

- Create structured answer blocks: Question, Direct answer (2 to 3 sentences), Expanded context, Source citations.

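- Use schema markup (FAQ schema, HowTo schema) to help AI extraction. Google now limits FAQ rich results to a small set of authoritative sites and has retired HowTo rich results, so treat markup as a machine-readability aid rather than a guaranteed SERP feature.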

- Make answers quotable: clear, attribution-ready, provable claims.

- Add internal links to conversion pages within answer blocks.

With AI Overviews appearing in search, your goal shifts from “get the click” to “get cited in the overview.” Answer blocks increase your chances of being referenced, building authority even when clicks decline.
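For the schema markup tactic, FAQ content is usually expressed as JSON-LD using the schema.org `FAQPage`, `Question`, and `Answer` types. A Python sketch that generates that markup from question and answer pairs; the sample question is hypothetical, and the output should be checked with a structured-data validator before shipping.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD for a list of (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical answer block: a direct two-sentence answer, as the tactic suggests.
markup = faq_jsonld([
    ("What is a growth sprint?",
     "A time-boxed program of weekly experiments. Each experiment has one owner, "
     "one primary metric, and a pre-committed decision rule."),
])
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The markup only makes the question-and-answer structure machine-readable; the answer text itself still has to be clear, quotable, and provable.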

Week 8: Retargeting Reset

Owner: Paid Media

What you are testing: Do objection-focused retargeting creatives outperform feature-focused ads?

Primary metric: Cost per qualified action.

Decision rule: Keep if CPQA decreases 12% or more.

Tactical execution:

- Audit current retargeting: What are you showing to bounced visitors?

- Map top 3 objections from sales calls (price, implementation time, competitor comparison).

- Create objection-focused ad variants: “Worried about implementation? See how customers go live in 2 weeks.”

- Segment retargeting by behavior: demo viewers versus pricing page viewers versus blog readers.

- Run for 2 to 3 weeks, track CPQA and conversion rate by segment.

Most retargeting is lazy: “You visited our site, here is our logo again.” Objection-focused retargeting addresses the actual reason the visitor did not convert, which is where the efficiency gains come from.
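Tracking CPQA by segment, as the last tactic suggests, lets you apply the 12% rule separately to each audience. A Python sketch with hypothetical segment names and spend figures; it does not test statistical significance, so small segments deserve extra caution.

```python
def cpqa(spend: float, qualified_actions: int) -> float:
    """Cost per qualified action (spend divided by qualified actions)."""
    return spend / qualified_actions if qualified_actions else float("inf")


def cpqa_verdict(control: tuple[float, int], variant: tuple[float, int],
                 required_drop: float = 0.12) -> tuple[float, str]:
    """Compare feature-focused (control) and objection-focused (variant) ads for one segment."""
    change = cpqa(*variant) / cpqa(*control) - 1
    return change, ("keep objection variant" if change <= -required_drop else "keep control")


# Hypothetical per-segment results: (spend, qualified actions) for each creative.
segments = {
    "pricing page viewers": ((4_000, 40), (4_000, 52)),
    "demo viewers": ((3_000, 30), (3_000, 32)),
}

for segment, (control, variant) in segments.items():
    change, verdict = cpqa_verdict(control, variant)
    print(f"{segment}: CPQA {change:+.1%} -> {verdict}")
```

In this made-up data the objection creative cuts CPQA about 23% for pricing page viewers but only about 6% for demo viewers, so it would be kept for one segment and not the other.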

Weeks 9 to 12: Scale What Worked, Then Double Down With Discipline

The final phase focuses on scaling winners and running repeat bets on your best-performing experiments. This is where compounding begins. Small wins from Weeks 1 to 8 get systematized and amplified.

Week 9: Lifecycle Win-Back Sequence

Owner: Lifecycle / Email

What you are creating: A three-email series for dormant leads that addresses objections and adds new proof.

Primary metric: Reactivation rate or meeting set rate.

Decision rule: Keep if meeting rate increases 10% versus standard nurture.

Tactical execution:

- Define dormant: No engagement in 90 or more days but still in target ICP.

- Email 1 (Day 0): Re-engagement hook plus what is new since they last engaged.

- Email 2 (Day 3): Address top objection plus social proof (customer story).

- Email 3 (Day 7): Final value prop plus clear CTA (demo, consultation, resource).

- Track open rate, click rate, and meeting set rate.

Email marketing delivers an average ROI of $36 to $42 for every $1 spent. Well-executed win-back sequences typically see 15% to 25% reactivation.
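The dormant-lead definition and the Day 0, 3, and 7 cadence can be turned into a send schedule. A Python sketch assuming hypothetical CRM fields (`id`, `last_engaged`, `in_icp`) and that the sequence starts on the day the list is pulled.

```python
from datetime import date, timedelta

DORMANT_AFTER = timedelta(days=90)
# Email name -> days after the sequence starts (Day 0, Day 3, Day 7).
SEND_OFFSETS = {
    "re-engagement hook": 0,
    "objection + social proof": 3,
    "final value prop + CTA": 7,
}


def winback_schedule(leads: list[dict], today: date) -> list[tuple[str, str, date]]:
    """Return (lead_id, email, send_date) for every in-ICP lead idle for 90+ days."""
    schedule = []
    for lead in leads:
        dormant = today - lead["last_engaged"] >= DORMANT_AFTER
        if dormant and lead["in_icp"]:
            for email, offset in SEND_OFFSETS.items():
                schedule.append((lead["id"], email, today + timedelta(days=offset)))
    return schedule


# Hypothetical leads: only L-1 is both dormant and in the ICP.
leads = [
    {"id": "L-1", "last_engaged": date(2025, 9, 1), "in_icp": True},
    {"id": "L-2", "last_engaged": date(2025, 12, 20), "in_icp": True},
    {"id": "L-3", "last_engaged": date(2025, 6, 1), "in_icp": False},
]

for row in winback_schedule(leads, today=date(2026, 1, 5)):
    print(row)
```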

Week 10: Outbound Talk-Track and Asset Pairing

Owner: SDR Lead

What you are testing: Does pairing cold outreach with a proof page increase meeting rate?

Primary metrics: (1) Reply rate, (2) Meeting set rate.

Decision rule: Keep if meetings increase 15% versus control.

Tactical execution:

- Rewrite SDR scripts: Move from feature-pitch to problem-identification.

- Pair with Week 5 proof asset: “Here is how [Similar Company] solved [Problem].”

- Create a personalized landing page for each outreach segment.

- A/B test: Email with proof link versus email without.

- Track link engagement plus meeting conversion.

This experiment leverages your Week 5 asset. This is compounding. Earlier experiments create resources that make later experiments more effective.

Week 11: Channel Mix Micro-Shift

Owner: Demand Gen

What you are testing: What happens if you move 10% to 15% of spend from the worst-performing channel to the best-performing one?

Primary metrics: (1) CPQA, (2) Pipeline created.

Decision rule: Keep if pipeline holds or improves while CPQA improves.

Tactical execution:

- Rank all paid channels by CPQA over the last 90 days.

- Identify best (LinkedIn? Google Search?) and worst (Display? Generic programmatic?).

- Shift 10% to 15% from worst to best.

- Monitor for 3 weeks: Does best channel maintain efficiency at higher volume?

- If yes, continue scaling. If channel saturates, reallocate elsewhere.

Marketing budgets are projected to grow 11.9% by 2026, with 72% going to digital channels. But smart allocation matters more than total spend. Many teams waste money on channels they cannot measure.
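The ranking and reallocation steps can be sketched in a few lines of Python. The channel names and 90-day totals are hypothetical; the sketch only computes the new split, while whether the best channel holds its efficiency at higher volume is what the 3-week monitoring window tests.

```python
def cpqa(spend: float, qualified_actions: int) -> float:
    """Cost per qualified action; a channel with no qualified actions ranks last."""
    return spend / qualified_actions if qualified_actions else float("inf")


def shift_budget(channels: dict[str, tuple[float, int]], share: float = 0.10) -> dict[str, float]:
    """Move `share` of the worst channel's spend to the best channel, ranked by CPQA."""
    if not 0.10 <= share <= 0.15:
        raise ValueError("the sprint calls for a 10% to 15% shift")
    ranked = sorted(channels, key=lambda name: cpqa(*channels[name]))
    best, worst = ranked[0], ranked[-1]
    budget = {name: float(spend) for name, (spend, _) in channels.items()}
    moved = budget[worst] * share
    budget[worst] -= moved
    budget[best] += moved
    return budget


# Hypothetical last-90-day totals: (spend, qualified actions).
channels = {
    "google_search": (30_000, 300),   # CPQA 100
    "linkedin": (20_000, 160),        # CPQA 125
    "display": (10_000, 40),          # CPQA 250
}
print(shift_budget(channels, share=0.15))
```

Total spend stays constant; only the mix changes, which keeps the test focused on allocation rather than budget size.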

Week 12: Repeat Winner Iteration

Owner: Growth Lead

What you are testing: Can you extract more value from your best-performing experiment (Weeks 1 to 11)?

Primary metric: Original experiment’s primary metric.

Decision rule: Keep if incremental lift persists (even if smaller than original).

Tactical execution:

- Review Weeks 1 to 11: Which experiment had the biggest impact?

- Brainstorm: What is the next iteration? (Example: If Week 2 offer test worked, test 3 more variants).

- Run improved version.

- Document learning: What patterns emerged?

The compounding principle: Great growth teams do not just run experiments. They create experiment families. Week 2’s offer test becomes Week 12’s multi-variant offer test, which becomes Q2’s AI-personalized offer engine.

Governance: How You Prevent Chaos While Moving Fast

Weekly Growth Meeting: 60 Minutes, No Exceptions

A strong cadence prevents drift. Here is the standard agenda that elite teams use:

Minutes 0 to 10: Metrics Review

- North Star: Did it move? By how much?

- Sprint Scoreboard: CVR, CPQA, SAL rate, speed-to-value.

- Pipeline health: Are we creating enough? Is it progressing?

Minutes 10 to 25: Last Week’s Experiments

- What shipped? (Be honest. Slips happen.)

- What are the results? (Even if inconclusive.)

- What is the decision? (Keep, kill, iterate, need more data.)

Minutes 25 to 40: Lessons Learned

- What surprised us?

- What patterns are we seeing?

- What assumptions did we invalidate?

Minutes 40 to 55: Next Week’s Experiments

- What is launching this week?

- Does the owner have what they need?

- Are decision rules clear?

Minutes 55 to 60: Blockers and Asks

- What is stuck?

- What do we need from exec team?

Record decisions in a shared doc (Notion, Confluence, Google Docs). This becomes your institutional memory, preventing you from re-litigating the same debates every quarter.

The Three Rules That Keep the Sprint Honest

Rule 1: Write the Hypothesis in Plain Language

Format: “We believe that [change] will cause [effect] because [reason]. We will measure this by [metric].”

Example: “We believe that reducing form fields from 7 to 3 will increase completion rate by 15% because buyers cite ‘too much effort’ in exit surveys. We will measure this by tracking form completion rate and SAL rate as a guardrail.”

Rule 2: If Results Are Inconclusive, Control Wins

Inconclusive means: (a) not statistically significant, (b) primary metric moved but guardrails failed, or (c) mixed signals you cannot explain.

Default to the control. Change has a cost (engineering time, design time, political capital). Do not pay that cost for ambiguous returns.
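Rule 2 can be encoded so that the default is structural rather than a judgment call in the meeting. A minimal Python sketch; the `Decision` enum, the `decide` function, and the 0.05 significance level are illustrative assumptions, while the three inconclusive conditions are taken directly from the rule.

```python
from enum import Enum


class Decision(Enum):
    SHIP_VARIANT = "ship variant"
    KEEP_CONTROL = "keep control"


def decide(p_value: float, primary_met: bool, guardrails_passed: bool,
           signals_explained: bool = True, alpha: float = 0.05) -> Decision:
    """Rule 2: anything short of a clean, significant win defaults to the control."""
    inconclusive = (
        p_value >= alpha              # (a) not statistically significant
        or not guardrails_passed      # (b) primary moved but a guardrail failed
        or not signals_explained      # (c) mixed signals you cannot explain
    )
    if inconclusive or not primary_met:
        return Decision.KEEP_CONTROL
    return Decision.SHIP_VARIANT


print(decide(p_value=0.01, primary_met=True, guardrails_passed=True))
```

Note that the variant has to clear every condition to ship, while any single failure keeps the control; that asymmetry is the cost of change expressed in code.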

Rule 3: Store Learnings in a Searchable Knowledge Base

Create a central repository (Notion, Confluence, Airtable) where every experiment is documented:

- Hypothesis.

- Metrics and decision rule.

- Results (quantitative).

- Learnings (qualitative).

- Decision (keep, kill, iterate).

Teams without institutional memory pay tuition twice, running the same failed experiment 18 months later because no one remembers it did not work.
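Whatever tool holds the knowledge base, each entry has the same shape. This in-memory Python sketch shows one possible record structure, with the hypothesis generated from Rule 1's plain-language template and a simple keyword search; the class, field names, and example record are hypothetical, and no results are filled in.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentRecord:
    name: str
    change: str
    effect: str
    reason: str
    metric: str
    decision_rule: str
    results: str = ""              # quantitative
    learnings: str = ""            # qualitative
    decision: str = "pending"      # keep, kill, or iterate
    tags: list[str] = field(default_factory=list)

    @property
    def hypothesis(self) -> str:
        # Rule 1's plain-language template.
        return (f"We believe that {self.change} will cause {self.effect} "
                f"because {self.reason}. We will measure this by {self.metric}.")


def search(records: list[ExperimentRecord], term: str) -> list[ExperimentRecord]:
    """Case-insensitive keyword search across each record's text fields."""
    term = term.lower()
    return [
        r for r in records
        if term in " ".join([r.name, r.hypothesis, r.decision_rule,
                             r.results, r.learnings, *r.tags]).lower()
    ]


kb = [ExperimentRecord(
    name="Week 3 form strategy",
    change="reducing form fields from 7 to 3",
    effect="a 15% increase in completion rate",
    reason="buyers cite 'too much effort' in exit surveys",
    metric="form completion rate, with SAL rate as a guardrail",
    decision_rule="keep if completion rate rises 15% and SAL rate drops no more than 5%",
    tags=["forms", "conversion"],
)]

print(kb[0].hypothesis)
print([r.name for r in search(kb, "form")])
```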

What Success Looks Like at Day 90

A real sprint delivers more than a lift in metrics. It creates an operating advantage that compounds over time.

A Repeatable Experiment Machine

You have proven you can ship 12 experiments in 12 weeks with clear decision rules. This tempo becomes your new baseline, not a one-time sprint.

A Stronger First-Party Growth Backbone

With better instrumentation, conversion paths, and CRM hygiene, you are less dependent on fragile third-party tracking. This resilience matters as privacy regulations tighten.

A Proof System That Converts Under Answer-First Search Dynamics

Your comparison pages, answer blocks, and proof assets work across AI search, traditional search, and sales conversations. This creates proof gravity that pulls buyers toward you.

Higher Output Per Dollar

Because budgets remain constrained (59% of CMOs report insufficient funds despite budget growth to 9.4% of revenue), the win condition is efficiency. You are converting more of your existing traffic, reducing CPQA, and improving sales velocity, all without adding spend.

The Board-Grade Sprint Dashboard: One Slide

If you want this sprint to read like a Gartner or Forrester operating model, your executive dashboard should contain exactly these elements (and nothing more):

- North Star Metric: Weekly trend line showing pipeline created, activated users, revenue, or qualified demos.

- Experiment Tempo: Launched versus goal (e.g., 12 of 12 experiments shipped).

- Win Rate: Wins divided by total experiments (healthy range: 30% to 50%).

- Compounding Assets Shipped: Count of proof pages, comparisons, calculators, sequences now in production.

- Pipeline Health: Pipeline created plus stage velocity (MQL to SQL to Opportunity).

Five metrics. One slide. Executives trust this format because it is measurable, explainable, and decision-linked.

From Plans to Systems

Most growth plans are aspiration documents: PDFs that get presented once and then live in Google Drive graveyards. This sprint is different because it is a system, not a plan.

- A system has tempo: weekly cadence that compounds.

- A system has decision rules: pre-committed thresholds that prevent politics.

- A system has accountability: clear ownership at the experiment level.

- A system has memory: institutional knowledge that prevents re-paying tuition.

In 2026, where budgets are constrained despite reaching 9.4% of revenue, buyers are self-directed (spending only 17% of their buying journey meeting with potential suppliers, per Gartner), and search is becoming answer-first, the only sustainable advantage is learning velocity.

Teams that learn faster compound their advantages. Teams that learn slower fall behind, regardless of budget size.

This 90-day sprint gives you the structure to learn fast, decide clearly, and compound ruthlessly.

Execute it, and you will not just have better metrics at day 90. You will have a machine that produces better metrics every quarter.

References

- CMO Survey. (2025). Marketing Spending Insights. Available at: https://cmosurvey.org/

- Forrester Research. (2025). B2B Marketing Investment Trends 2026. Available at: https://www.forrester.com/

- Gartner. (2025). B2B Buying Behavior Report. Available at: https://www.gartner.com/

- Gartner. (2025). CMO Spend Survey: Digital Marketing Budget Projections. Available at: https://www.gartner.com/